Key Takeaways:

- Ads are rolling out in free and Go ChatGPT tiers: OpenAI is testing clearly labeled, contextually targeted ads to subsidize free and low-cost access to ChatGPT.

- Responses remain separate from advertising: OpenAI says ads won't influence ChatGPT's answers, and it insists it will never sell user conversations to advertisers.

- Existing regulations may not fit: Lawmakers designed frameworks like the Digital Services Act (DSA) and Digital Markets Act (DMA) for traditional platforms, leaving gaps when it comes to conversational AI advertising.

- New rules needed: Governments may need to adapt or create policies to govern transparency, user protection, and accountability for conversational AI advertising.

It seems like OpenAI’s CEO, Sam Altman, has a new favorite sport: backpedaling. At an event at Harvard University in May 2024, he said that using advertising in ChatGPT would be a ‘last resort’ and that ‘ads plus AI is sort of uniquely unsettling.’

Well, things have since taken a rather drastic turn. On Friday, January 16, OpenAI announced that it’s testing impression-based advertising in ChatGPT with US users, and that this new feature will roll out to the free and Go tiers of the platform around February. This all points to a shift in how companies are monetizing generative AI, and it definitely raises concerns around transparency, data governance, and regulatory compliance.

And with any change come questions:

- What does this mean for me as an everyday ChatGPT user?

- Is the large language model (LLM) going to use our data to serve personalized ads?

- How will we know what is an ad and what isn’t?

Let’s unpack what ChatGPT ads mean for users wanting to protect their sensitive information and lawmakers who may now need to adjust policies and governance around conversational AI.

How ChatGPT Advertising Will Actually Work

Before getting into regulation and data governance, it’s good to understand what OpenAI is rolling out and, importantly, what it isn’t. For now, OpenAI says it’s testing ads with a limited pool of US advertisers, each committing less than $1M.

Source: OpenAI

This isn't a full-scale marketplace but a controlled pilot that OpenAI designed to see how advertising fits into a conversational interface. Rather than using performance-based pricing, OpenAI is charging advertisers on a pay-per-impression basis, which means advertisers pay when ChatGPT shows an ad, not when a user clicks or buys anything.

From OpenAI's perspective, this guarantees revenue even if users ignore the ads completely, which makes it a safer bet while the company experiments with a brand-new ad format.
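A quick worked comparison shows why. The sketch below is purely illustrative: the $60 cost per 1,000 impressions and the $3 cost per click are hypothetical prices (OpenAI hasn't disclosed its rates), and the function names are made up for this example. It compares what each pricing model would earn from the same 1,000,000 ads when nobody clicks and when 1% of viewers do.

```python
# Illustrative only: prices and volumes are hypothetical, not OpenAI's actual rates.


def impression_revenue(impressions: int, cpm: float) -> float:
    """Pay-per-impression: the advertiser pays `cpm` dollars per 1,000 ads shown."""
    return impressions / 1000 * cpm


def click_revenue(impressions: int, click_through_rate: float, cpc: float) -> float:
    """Pay-per-click: the advertiser pays `cpc` dollars only for ads that get clicked."""
    clicks = impressions * click_through_rate
    return clicks * cpc


if __name__ == "__main__":
    shown = 1_000_000  # hypothetical number of ad impressions
    for ctr in (0.0, 0.01):  # nobody clicks vs. 1% of viewers click
        per_impression = impression_revenue(shown, cpm=60.0)
        per_click = click_revenue(shown, ctr, cpc=3.0)
        print(f"CTR {ctr:.0%}: per-impression ${per_impression:,.0f}, per-click ${per_click:,.0f}")
```

Under pay-per-impression, revenue depends only on how many ads are shown, so in this example a 0% click-through rate still earns $60,000, while the pay-per-click model earns nothing.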

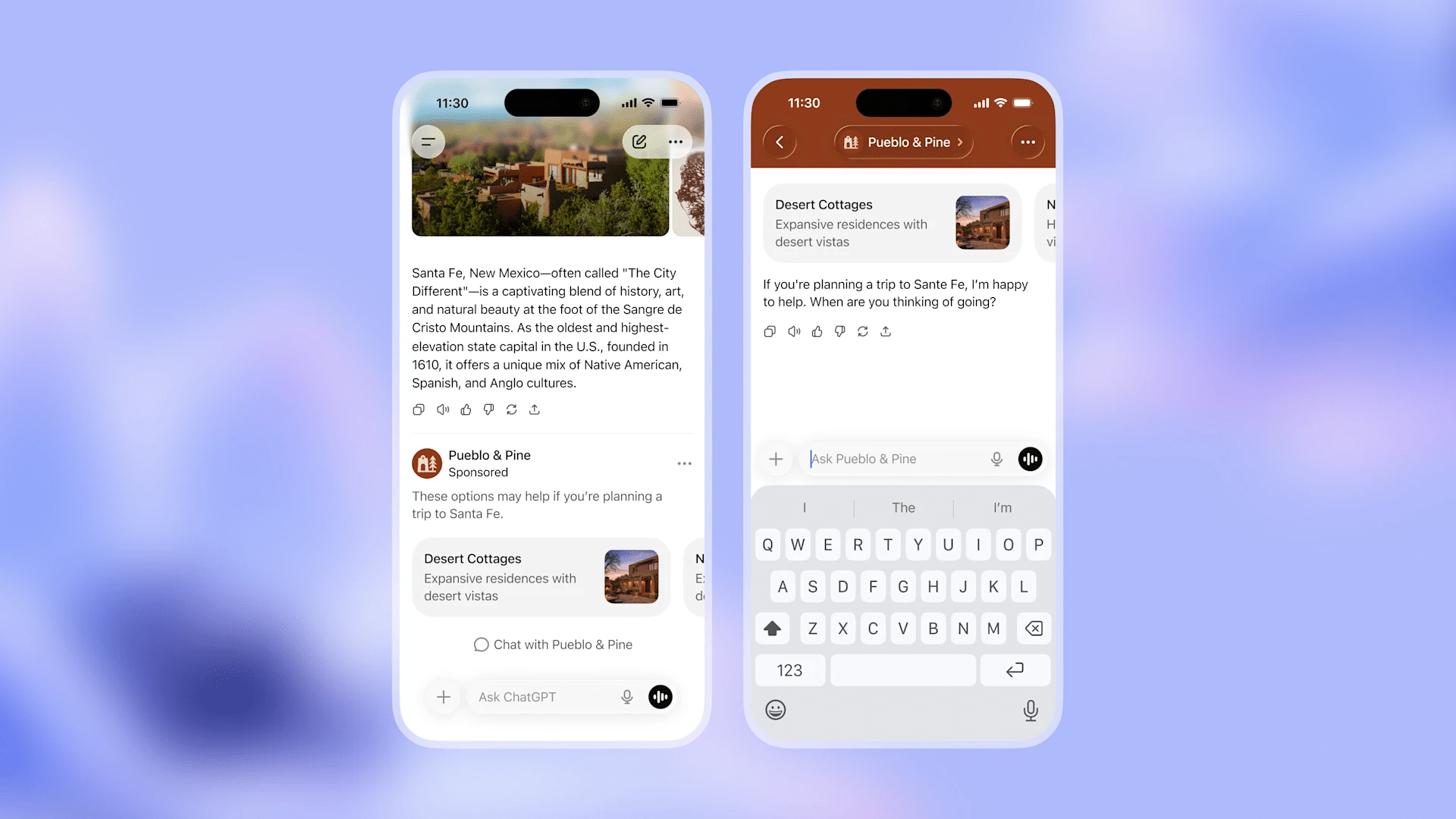

Ads will appear at the bottom of the ChatGPT interface, not within the answers themselves. And OpenAI will clearly label them and keep them visually separate from conversations.

Source: OpenAI

OpenAI is designing ChatGPT ads to display below conversations (at least for now), but even that separation raises questions regulators may not have expected to answer. In its announcement, the company stated that 'Ads do not influence the answers ChatGPT gives you.' It added that 'Answers are optimized based on what's most helpful to you. Ads are always separate and clearly labeled.'

Ads won't be shown to Plus, Pro, or Enterprise users, which suggests OpenAI is using them mainly to subsidize free and low-cost access to ChatGPT.

Why OpenAI Is Turning To Ads Now

Ads have popped up in numerous places for years (streaming services, social media, and web browsing, to name just a few), so why is OpenAI trialing this shift now?

- OpenAI says advertising helps keep ChatGPT accessible for free and low-cost users.

- Subscription revenue isn’t enough to cover the cost of running and scaling large AI models.

- The company reportedly posted an operating loss of around $8B in the first half of 2025, with only about 5% of users paying.

- The massive spending commitments on data centers and chips are driving up costs for the company.

OpenAI has framed advertising as a way to keep ChatGPT widely accessible. In its announcement, the company said the move would allow ‘more people to benefit from our tools with fewer usage limits or without having to pay.’

Okay, so that explanation may make a bit of sense, but it’s also incomplete.

According to reporting by the Financial Times, OpenAI posted an operating loss of around $8B in the first six months of 2025. Even though it has roughly 800M users, only about 5% (around 40M) are paid subscribers. At the same time, OpenAI now has about $1.4T in spending commitments tied to data centers, chips, and other infrastructure to scale its models.

It's fair to say that running one of the world's most widely used AI systems is expensive, and subscription revenue alone clearly isn't covering the bill. That makes advertising look less like a philosophical compromise and more like a financial necessity.

Speaking shortly after the announcement at the World Economic Forum (WEF) in Davos, OpenAI CFO Sarah Friar defended this move by framing it as an access issue rather than a profit grab. ‘Our mission is artificial general intelligence for the benefit of humanity,’ she said, ‘not for the benefit of humanity who can pay.’

In this framing, it seems the company is using advertising to fund its growth without locking advanced AI behind a paywall, even if it complicates trust, privacy, and governance in the process.

Advertising Inside Conversations Changes The Data Equation

Let’s face it, advertising on websites and social media platforms is nothing new. Advertising inside a conversational AI system is. The worrying thing about this development is that ChatGPT is often responding to prompts that contain personal, emotional, or sensitive information.

Remember the trend of people showing off how they were using ChatGPT as a therapist? That's the heart of the concern: users share deeply personal details in their prompts, which turns advertising into a data governance issue.

Source: Reddit

OpenAI says ad targeting will be contextual, which means the topic of the conversation, rather than a user's personal data, determines which ads appear. For example, someone researching a holiday destination might see travel-related ads, while a user asking about productivity might see a sponsored service.

Contextual targeting may sound privacy-friendly, but conversational context is far richer than a search query or article headline, because prompts inside ChatGPT can reveal intent, uncertainty, and vulnerability in ways traditional advertising platforms never see.

OpenAI has firmly stated that it will not share user conversations with advertisers. But even so, questions remain about what data OpenAI processes internally to decide which ads appear, how long it retains that data, and who has access to it.

Source: OpenAI

Traditional digital advertising knows what users are interested in; conversational AI often knows why. That makes advertising decisions far more sensitive and potentially more powerful. So far, OpenAI hasn't fully explained what information it will use to determine relevance, which is a big gap in transparency.

Safeguards, Controls, And OpenAI’s Promises

There are a couple of safeguards OpenAI is putting into place. But are they enough? OpenAI won’t show ads to accounts where the user is under 18, and it will exclude ads from appearing near sensitive topics like health and mental health.

ChatGPT treats a user as under 18 either if they have said so or if its age-prediction system estimates that they are.

Source: OpenAI on X
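The safeguards described above amount to a simple eligibility check. The sketch below is a minimal illustration, assuming each account carries two age flags (one declared, one predicted) and that the conversation has already been assigned a topic label; the class, field, and topic names are hypothetical, not OpenAI's implementation.

```python
# Hypothetical sketch of the ad-eligibility safeguards OpenAI describes;
# names and topic labels are illustrative assumptions only.
from dataclasses import dataclass

# Hypothetical labels for topics where no ads should appear.
SENSITIVE_TOPICS = {"health", "mental_health"}


@dataclass
class Account:
    declared_under_18: bool   # the user has said they are under 18
    predicted_under_18: bool  # an age-prediction model estimates they are under 18


def ads_allowed(account: Account, conversation_topic: str) -> bool:
    """Return True only if neither the age check nor the topic check blocks ads."""
    if account.declared_under_18 or account.predicted_under_18:
        return False
    return conversation_topic not in SENSITIVE_TOPICS


adult = Account(declared_under_18=False, predicted_under_18=False)
teen = Account(declared_under_18=False, predicted_under_18=True)
print(ads_allowed(adult, "travel"))         # True
print(ads_allowed(adult, "mental_health"))  # False
print(ads_allowed(teen, "travel"))          # False
```

Either age signal on its own is enough to switch ads off, mirroring the "said so or predicts it" rule.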

Users will also be able to see why OpenAI is showing them an ad, dismiss it, and provide feedback. These features may seem a little like what we already see on social media platforms. But the difference is emotional context.

Seeing an ad next to a Facebook post is one thing. Seeing one after asking AI for advice about your career, finances, or well-being is quite another. OpenAI insists that ChatGPT’s responses will always be ‘driven by what’s objectively useful, never by advertising.’

Whether users continue to believe that as ads become more familiar is an open question.

Why Existing Regulations Don’t Quite Fit

Let’s dig into the regulatory perspective on all of this. Right now, ChatGPT ads are in a grey zone. Lawmakers originally wrote most advertising and digital governance frameworks with feeds, timelines, and websites in mind, not conversational systems that respond in natural language and build ongoing context with users.

- The EU's Digital Services Act (DSA) places heavy emphasis on advertising transparency and disclosure. ChatGPT's ads are labeled, but the DSA doesn't fully account for how conversational interfaces shape trust and perception.

Source: European Commission

- The Digital Markets Act (DMA) is all about gatekeeping power and competition. If conversational AI becomes a primary interface for accessing information, questions around preferential treatment and commercial influence will become harder to ignore.

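- The EU AI Act is another regulation lawmakers may need to re-examine. The law imposes transparency obligations, such as disclosing when people are interacting with an AI system and marking AI-generated content, and it prohibits AI practices that manipulate users in harmful ways.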

OpenAI's approach partly aligns with these requirements, but regulators will likely still need to consider whether disclosure alone is enough when ads appear inside individual, highly personalized dialogue rather than alongside content made for wide audiences.

The Risk of What Comes Next

There’s an even bigger concern to consider here: how these ads may evolve.

Right now, OpenAI promises strict separation, contextual targeting only, and user control. But once advertising becomes a serious income stream, the company may loosen those rules.

The internal use of data to optimize the relevance of ads and measure their effectiveness could expand, even if OpenAI never sells the data externally.

A Regulatory Reckoning For Conversational AI

Advertising inside ChatGPT may help fund the next phase of AI development, but it’s also going to force regulators to confront what could be an entirely new reality. Governments may need to rethink how transparency, consent, and accountability will work when ads appear inside conversations rather than beside content.

That could mean new policies specifically drawn up for conversational systems, or significant changes to existing frameworks. What’s clear right now is that advertising has pushed generative AI into regulatory territory that no one has fully mapped yet.

Whether OpenAI’s promises hold and whether regulators move quickly enough to keep up will shape how commercialized and trusted conversational AI becomes.

What Happens Next for ChatGPT Ads And AI Regulation?

For now, OpenAI’s advertising experiment is relatively small, and they’re certainly wrapping it in assurances. Ads are limited, clearly labeled, and framed as a way to keep ChatGPT accessible rather than to squeeze users for data.

But that careful positioning may not last forever, especially if ads become essential to funding AI infrastructure at scale. Regulators are likely to watch this rollout closely, as conversational AI advertising sits awkwardly between existing frameworks, and that could trigger policy reviews, clarifications, or entirely new rules.

Why? Because lawmakers didn’t build current advertising rules for systems that talk. Regulators now need to decide how advertising law applies when the interface itself feels human.

If ads inside AI conversations become the norm, lawmakers will need to move fast. Otherwise, the rules governing AI monetization may be one step behind the tech that’s about to reshape how people ask, learn, and decide.

Sources

- https://www.businessinsider.com/chatgpt-ads-openai-2026-1

- https://openai.com/index/our-approach-to-advertising-and-expanding-access/

- https://www.cloudflare.com/learning/ai/what-is-large-language-model/

- https://www.ft.com/content/908dc05b-5fcd-456a-88a3-eba1f77d3ffd

- https://help.openai.com/en/articles/12652064-age-prediction-in-chatgpt

- https://quasa.io/media/openai-s-1-trillion-gamble-800-million-users-but-only-5-foot-the-bill

- https://www.reddit.com/r/ChatGPT/comments/1k1dxpp/chatgpt_has_helped_me_more_than_15_years_of/

- https://digital-strategy.ec.europa.eu/en/policies/digital-services-act

- https://digital-markets-act.ec.europa.eu/index_en

Cassy is a tech and SaaS writer with over a decade of writing and editing experience spanning newsrooms, in-house teams, and agencies. After completing her postgraduate education in journalism and media studies, she started her career in print journalism and then transitioned into digital copywriting for all platforms.

She has a deep interest in the AI ecosystem and how this technology is shaping the way we create and consume content, as well as how consumers use new innovations to improve their well-being.

The Tech Report editorial policy is centered on providing helpful, accurate content that offers real value to our readers. We only work with experienced writers who have specific knowledge in the topics they cover, including the latest developments in technology, software, hardware, and more. Our editorial policy ensures that each topic is researched and curated by our in-house editors. We maintain rigorous journalistic standards, and every article is 100% written by real authors.